What is the TSCache?

Like all caches, the TSCache was designed to provide rapid access to a very large set of information as if all of it were held in directly accessible memory (RAM), but without requiring an unduly large amount of RAM to operate: all but the most recently used information is kept on disk/SSD. Also like other caches, it lets this information be accessed through a set of relatively small “keys”, hiding the complexity of managing the information and providing seamless access.
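
The two-tier idea described above (recently used items in RAM, everything else on disk) can be illustrated with a minimal sketch. It assumes a least-recently-used (LRU) eviction policy and uses pickle files in a temporary directory as the disk tier. `TwoTierCache`, `put`, `get` and `in_ram` are hypothetical names chosen for illustration, not the TSCache API.

```python
import os
import pickle
import tempfile
from collections import OrderedDict


class TwoTierCache:
    """Hypothetical sketch: hot items in RAM (LRU order), the rest spilled to disk."""

    def __init__(self, ram_capacity, spill_dir=None):
        self.ram_capacity = ram_capacity
        self.ram = OrderedDict()  # key -> value, least recently used first
        self.spill_dir = spill_dir or tempfile.mkdtemp(prefix="cache-sketch-")

    def _path(self, key):
        # Assumption: keys are strings that are safe to use as file names.
        return os.path.join(self.spill_dir, key + ".pkl")

    def put(self, key, value):
        """Store value in RAM, spilling the least recently used item if RAM is full."""
        path = self._path(key)
        if os.path.exists(path):
            os.remove(path)  # drop any stale on-disk copy of this key
        self.ram[key] = value
        self.ram.move_to_end(key)  # mark as most recently used
        while len(self.ram) > self.ram_capacity:
            old_key, old_value = self.ram.popitem(last=False)  # evict the LRU item
            with open(self._path(old_key), "wb") as f:
                pickle.dump(old_value, f)

    def get(self, key):
        """Return the value for key, promoting it from disk to RAM if needed."""
        if key in self.ram:
            self.ram.move_to_end(key)
            return self.ram[key]
        path = self._path(key)
        if not os.path.exists(path):
            raise KeyError(key)
        with open(path, "rb") as f:
            value = pickle.load(f)
        self.put(key, value)  # put() removes the disk copy and may spill another item
        return value

    def in_ram(self, key):
        return key in self.ram
```

With `ram_capacity=2`, storing `"a"`, `"b"` and `"c"` spills `"a"` to disk; a later `get("a")` transparently reloads it and spills `"b"` instead. The caller only ever sees keys, never the tier an item lives in.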

The TSCache (like some but not all caches) offers concurrent, thread-safe access.
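
One common way to make a cache safe for concurrent threads without serializing every access is lock striping: keys are hashed onto one of several locks, so threads working on different stripes do not block each other. The sketch below illustrates that general technique only; nothing in this document says the TSCache uses it, and `StripedLockMap` and its methods are hypothetical.

```python
import threading


class StripedLockMap:
    """Hypothetical sketch of lock striping: each key hashes to one of N locks."""

    def __init__(self, stripes=16):
        self._locks = [threading.Lock() for _ in range(stripes)]
        self._shards = [{} for _ in range(stripes)]

    def _index(self, key):
        return hash(key) % len(self._locks)

    def put(self, key, value):
        i = self._index(key)
        with self._locks[i]:  # only this stripe is locked
            self._shards[i][key] = value

    def get(self, key, default=None):
        i = self._index(key)
        with self._locks[i]:
            return self._shards[i].get(key, default)

    def update(self, key, fn, initial):
        """Atomically replace the value for key with fn(old_value)."""
        i = self._index(key)
        with self._locks[i]:
            shard = self._shards[i]
            shard[key] = fn(shard.get(key, initial))
```

Because `update` performs its read-modify-write while holding the stripe's lock, eight threads each incrementing the same counter 1,000 times always end at 8,000.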

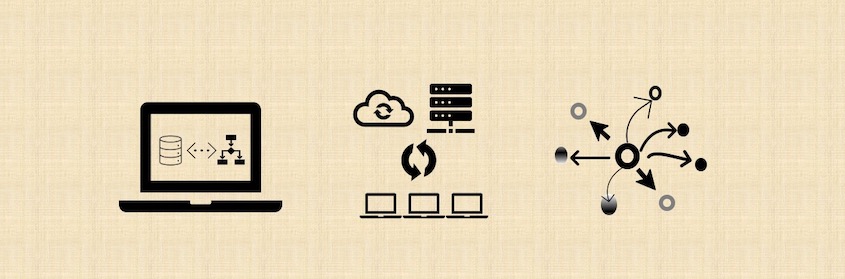

The TSCache may be “embedded” in an application running on a single server or PC. Alternatively, it may be deployed in “client-server mode”, where multiple applications running on other PCs or servers access a common cache, or in “distributed” mode, where a single TSCache is replicated across multiple server instances, providing faster read access for a greater number of users and robustness in the event of a server failure. It also offers restart capability (at a performance cost) and transactional capability (at a greater performance cost).
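
The replication benefits named for distributed mode (more read capacity, survival of a server failure) can be simulated in a single process. In this hypothetical sketch each replica is just a dictionary: writes go to every live replica, and reads rotate round-robin across replicas while skipping any that are down. `ReplicatedCache` is an illustrative name, and the sketch deliberately omits networking and re-synchronizing a replica that comes back online.

```python
class ReplicatedCache:
    """Hypothetical single-process sketch of a replicated cache."""

    def __init__(self, n_replicas):
        self.replicas = [{} for _ in range(n_replicas)]
        self.alive = [True] * n_replicas
        self._next = 0  # round-robin read cursor

    def put(self, key, value):
        # Write to every live replica so any of them can serve the read.
        for i, store in enumerate(self.replicas):
            if self.alive[i]:
                store[key] = value

    def get(self, key):
        # Spread reads across replicas; fail over past dead ones.
        n = len(self.replicas)
        for _ in range(n):
            i = self._next
            self._next = (self._next + 1) % n
            if self.alive[i]:
                return self.replicas[i][key]
        raise RuntimeError("no live replicas")
```

Marking one replica as down leaves reads working, which is the robustness property described above. Only when every replica is down does `get` fail.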

How is the TSCache different from its competitors?

The TSCache offers four different types of interfaces for accessing data, whereas competitors generally offer one. These interfaces are not just different ways of storing and accessing data; they provide key functional differences, e.g., multiple keys for a single data item, and Big Data capabilities with multi-dimensional search. The different interfaces are discussed below.
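
The “multiple keys to a single data item” capability mentioned above can be pictured as a key index that points at one shared stored item. This is a hypothetical sketch of the concept, not the TSCache interface; `MultiKeyStore`, `put`, `get` and `update` are illustrative names, and re-pointing an existing key at a new item is not handled.

```python
class MultiKeyStore:
    """Hypothetical sketch: several keys resolve to one stored item."""

    def __init__(self):
        self._items = {}       # internal item id -> value (stored once)
        self._key_to_id = {}   # any key -> internal item id
        self._next_id = 0

    def put(self, keys, value):
        item_id = self._next_id
        self._next_id += 1
        self._items[item_id] = value
        for k in keys:
            self._key_to_id[k] = item_id
        return item_id

    def get(self, key):
        return self._items[self._key_to_id[key]]

    def update(self, key, value):
        # The item is stored once, so an update through any key
        # is visible through all of its other keys.
        self._items[self._key_to_id[key]] = value
```

For example, a customer record stored under both `"user:42"` and `"email:ann@example.com"` is kept once; updating it through either key changes what both keys return.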

The TSCache supports bulk operations that store or retrieve multiple cached data items in a single call. The cache often performs these operations in parallel, providing unparalleled (if you'll forgive the pun) performance.
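
A parallel bulk operation can be sketched by fanning a batch of single-item calls out over a thread pool, as below. `get_many`, `put_many`, `fetch_one` and `store_one` are hypothetical names; `fetch_one` and `store_one` stand in for whatever per-item lookup and store (for example, a disk or network read) the cache performs internally.

```python
from concurrent.futures import ThreadPoolExecutor


def get_many(fetch_one, keys, max_workers=8):
    """Hypothetical bulk read: fetch all keys in parallel and return a dict."""
    keys = list(keys)  # materialize so keys can be zipped with the results
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        values = pool.map(fetch_one, keys)  # results come back in key order
        return dict(zip(keys, values))


def put_many(store_one, items, max_workers=8):
    """Hypothetical bulk write: store every (key, value) pair in parallel."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # list() waits for every store and re-raises the first exception, if any.
        list(pool.map(lambda kv: store_one(*kv), items.items()))
```

The caller makes one call per batch, and the per-item work overlaps. This matters most when each individual lookup spends time waiting on I/O.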

The TSCache is designed and implemented for parallelism and concurrency, enabling rapid concurrent access by multiple users, processes, and/or threads. Importantly, it schedules resources fairly when multiple processes/threads compete for them, and it makes full use of all allocated cores whether they are engaged by one process or by many.
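
The fairness property described above can be illustrated with simple round-robin scheduling: each client gets one turn in rotation, so a busy client cannot starve the others, yet a client running alone receives every turn. This is a sketch of a generic technique; the document does not say which scheduling policy the TSCache uses, and `fair_order` is a hypothetical name.

```python
from collections import deque


def fair_order(queues):
    """Hypothetical round-robin sketch: serve one request per client in turn.

    queues maps a client name to its list of pending requests; the result is
    the order in which (client, request) pairs would be served.
    """
    pending = deque((client, deque(reqs)) for client, reqs in queues.items() if reqs)
    order = []
    while pending:
        client, reqs = pending.popleft()
        order.append((client, reqs.popleft()))
        if reqs:
            pending.append((client, reqs))  # back of the line for its next turn
    return order
```

With clients `A` (three requests), `B` (one) and `C` (two), service alternates `A, B, C, A, C, A` instead of draining `A` first.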

The TSCache enables in-place modification of very large single data items (e.g., video or genetic sequences), eliminating the need to retrieve and re-save these items in their entirety when modifying them.
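
The benefit of in-place modification can be seen at the file level: seeking to an offset and overwriting a few bytes touches only that region, whereas a read-modify-write cycle moves the entire item. The sketch below assumes a large item is backed by an ordinary file. `patch_in_place` and `read_range` are hypothetical helpers, not TSCache calls.

```python
def patch_in_place(path, offset, data):
    """Overwrite len(data) bytes at offset without rewriting the rest of the file."""
    with open(path, "r+b") as f:  # read/write mode that does not truncate
        f.seek(offset)
        f.write(data)


def read_range(path, offset, length):
    """Read only the requested slice of a large item."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)
```

Patching four bases in the middle of a stored sequence such as `ACGTACGTACGT` writes four bytes. The rest of the sequence is never read or rewritten.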